This guide will teach you how to build a powerful GraphQL API with Rust. You will use Rust and a few well-known libraries to create an HTTP server, add GraphQL support, and even implement a small API. After that, you will learn how to make this service ready for production by adding tracing and metrics, and then you will put everything inside a Docker container, ready to deploy.

GraphQL is a vital API technology these days. Facebook, Netflix, Spotify, Shopify, and many other prominent tech companies build and maintain GraphQL APIs. Federated GraphQL APIs in particular (APIs with an API gateway and many individual microservices composing one large supergraph) bring a lot of flexibility to the table. Teams can work independently while contributing to one single API surface. Clients can then use any backend functionality they need through a single endpoint, while still querying only the data they actually need.

The chances are high that you will one day be asked to implement a GraphQL API. Perhaps you already build GraphQL APIs and are looking for more exciting ways to build them. In either case, this guide is for you.

(A quick note: If you get stuck while going through this guide, you can find the full code here.)


About Rust and GraphQL

GraphQL

GraphQL is an open-source data query and manipulation language for APIs and a runtime for fulfilling queries with existing data. It was initially developed at Facebook in 2012 and publicly released in 2016.

Nowadays, many companies like Netflix, Spotify, Shopify, and more build GraphQL APIs because the technology provides more flexibility to clients than traditional REST APIs can. Clients can specify which data they want to fetch, and only the data a client requests is ever returned from an API endpoint. Large JSON blobs with many unneeded fields are a thing of the past with GraphQL.

You can learn more about GraphQL here.

Rust

Rust is a statically typed systems programming language that compiles to machine code. Its runtime speed is often on par with C and C++, and it also provides modern language constructs. With opinionated tooling and a rapidly growing ecosystem, Rust is a great candidate if you are looking for a new programming language to learn or to build your systems with.

You can learn more about Rust here.

Preparations

This tutorial assumes that you use a Unix-style terminal. If you are on Windows, please take a look at Windows Subsystem for Linux. Most of this tutorial should work if you decide to stay on Windows. You will just need to make sure to adjust your paths accordingly (Windows uses \ instead of / as a path separator, for example).

If you don’t have Rust installed yet, rustup is the fastest way to install Rust and all the tooling you need to follow this article.

As soon as rustup is installed, or in case you already have a complete toolchain, make sure that you use at least Rust version 1.72.0.

If you are unsure which version you have installed, you can use the following commands:

```shell
❯ rustup show
Default host: aarch64-apple-darwin
rustup home: /Users/oliverjumpertz/.rustup

installed toolchains
--------------------

stable-aarch64-apple-darwin (default)
nightly-aarch64-apple-darwin
1.65.0-aarch64-apple-darwin
1.70.0-aarch64-apple-darwin
1.71.0-aarch64-apple-darwin
1.71.1-aarch64-apple-darwin
1.72.0-aarch64-apple-darwin

active toolchain
----------------

stable-aarch64-apple-darwin (default)
rustc 1.73.0 (cc66ad468 2023-10-03)

❯ rustc --version
rustc 1.73.0 (cc66ad468 2023-10-03)
```

The last thing you need is an installation of Docker. Make sure you either have Docker Desktop (for Mac and Windows) or Docker (for Linux) itself installed.
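You may want to automate the `rustc --version` check from above, for example in a CI script. One minimal approach, using only the standard library, is to parse the version triple and compare it against the minimum. The function names `parse_rustc_version` and `meets_minimum` are hypothetical, and the code assumes the output format shown above.

```rust
/// Extracts (major, minor, patch) from a line such as
/// "rustc 1.73.0 (cc66ad468 2023-10-03)" or "rustc 1.75.0-nightly (...)".
fn parse_rustc_version(line: &str) -> Option<(u32, u32, u32)> {
    // The second whitespace-separated token is the version string.
    let version = line.split_whitespace().nth(1)?;
    // Drop a pre-release suffix such as "-nightly" or "-beta.3".
    let core = version.split('-').next()?;
    let mut parts = core.split('.');
    let major = parts.next()?.parse::<u32>().ok()?;
    let minor = parts.next()?.parse::<u32>().ok()?;
    let patch = parts.next()?.parse::<u32>().ok()?;
    Some((major, minor, patch))
}

/// Returns true if the reported version is at least `minimum`.
/// Tuples compare lexicographically, which matches plain x.y.z ordering.
fn meets_minimum(line: &str, minimum: (u32, u32, u32)) -> bool {
    parse_rustc_version(line).is_some_and(|v| v >= minimum)
}
```

With the toolchain shown above, `meets_minimum("rustc 1.73.0 (cc66ad468 2023-10-03)", (1, 72, 0))` returns `true`.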

Setting up the project

It’s time to start building your GraphQL API with Rust. Open your favorite terminal app and navigate to a folder you want your project to reside in.

Create a new folder for your project and jump right into it:

```shell
❯ mkdir axum-graphql
❯ cd axum-graphql
```

The next thing you need to do is to use cargo to create a new Rust project:

```shell
❯ cargo init
     Created binary (application) package
```

Cargo automatically creates a binary project and initializes a git repository for you. Your project folder should now look like this:

```
| Cargo.toml
| .git/
| .gitignore
| src/
  | main.rs
```

This setup is usually enough for a hobby project, but you want some stability, especially when building production-grade services.

If every developer has a different version of Rust installed, you can quickly run into bugs that only occur on specific versions, for example. To prevent this, rustup (which manages the toolchain behind your cargo commands) lets you pin the Rust version your project is built with.

Create a new file and call it rust-toolchain.toml. Then insert the following content:

```toml
[toolchain]
channel = "1.72.0"
```

The line channel = "1.72.0" ensures that whenever you run a cargo command in this project, Rust version 1.72.0 is used. If that version is not activated or installed, it is automatically activated or installed for you.

The next thing you want to do is to ensure at least some level of code quality. Fortunately, Rust decided very early in its lifetime to be opinionated. This means that there is one officially supported code style, and it is fully implemented in rustfmt, the official Rust formatter.

You can install rustfmt manually, but you would have to tell every other developer you potentially work with that they also need this component installed. This is why rust-toolchain.toml has support for so-called components (tools that can do a multitude of work, like formatting your code).

Add the following line components = ["rustfmt"] to your rust-toolchain.toml, which should make the whole file look like this now:

```toml
[toolchain]
channel = "1.72.0"
components = ["rustfmt"]
```

With rustfmt added, you can now automatically format your code. Before you continue, however, it makes sense to give the tool a configuration file to work with. Create a new file called .rustfmt.toml in your project and add the following content:

```toml
edition = "2021"
newline_style = "Unix"
```

This only tells rustfmt two things for now:

- The edition of Rust you use is 2021
- Newlines should be formatted Unix-style
  - This becomes interesting as soon as you want to build inside a Docker container but work on Windows yourself, for example

If you want to see which other configuration options you have, you can look at a full list of settings here.

The last thing you need now is a linter. Most programming languages have a preferred way of doing things (usually called idiomatic), and most also have common pitfalls. Rust is no different. Clippy is Rust’s linter, and it comes with over 550 lints. That is more than enough to help you avoid common mistakes and keep your code readable for other Rust developers.

Add another entry to the components within your rust-toolchain.toml called "clippy", which should make the file look like this now:

```toml
[toolchain]
channel = "1.72.0"
components = ["rustfmt", "clippy"]
```

Clippy, like rustfmt, supports a configuration file where you can change settings for specific lints. Create another file called .clippy.toml inside your project and add the following content:

```toml
cognitive-complexity-threshold = 30
```

The line above sets the threshold of Clippy’s cognitive_complexity lint to 30 for any function it analyzes (the more complex a function, the harder it becomes to read and maintain). Note that this lint belongs to Clippy’s nursery group and is not enabled by default, so the threshold only takes effect once you enable it. There are many more settings, of course, and you can take a look at the full list of available linter settings here.

With everything set up and configured, you now have two commands that help you to lint and format your code.

cargo fmt --all goes over your code and formats everything according to the official style guide and the rules you configure within .rustfmt.toml.

cargo clippy --all --tests takes care of linting your code.

This is everything you need for now as a foundation for your GraphQL API with Rust. It’s finally time to jump into the actual implementation.

Creating the web server

There are many web server implementations for Rust nowadays, and it’s often difficult to choose the right one. To keep things simple, you will use axum for your GraphQL API. It has become one of the most popular choices in the community for new projects, no matter whether you need an HTTP, REST, or GraphQL API.

axum is a web framework created and maintained by the same team that builds and maintains tokio (the most widely used asynchronous runtime for Rust). It is fast, easy to use, and integrates well with the tokio ecosystem, which makes it a solid choice for projects of any size.

The first step is to add two dependencies to your project. Open Cargo.toml and add the following dependencies:

- axum

- tokio

Your Cargo.toml should look like this now:

```toml
[package]
edition = "2021"
name = "axum-graphql"
version = "0.1.0"

[dependencies]
axum = "0.6.20"
tokio = { version = "1.32.0", features = ["full"] }
```

Now open src/main.rs and replace its content with the following code:

```rust
use axum::{Router, Server};

#[tokio::main] // (1)
async fn main() {
    let app = Router::new(); // (2)

    Server::bind(&"0.0.0.0:8000".parse().unwrap()) // (3)
        .serve(app.into_make_service())
        .await
        .unwrap();
}
```

Let’s take a quick look at what this code does:

(1): #[tokio::main] is a macro that abstracts away the basic setup logic of starting a thread pool and setting up tokio itself.

(2): A Router is axum’s way to route requests. You will later add routes here to map different endpoints to functions.

(3): Server is the HTTP server axum uses (in axum 0.6, it is a re-export of hyper’s Server). It takes a Router and calls it for every incoming request it receives.
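Conceptually, a Router is a lookup table from an HTTP method and a path to a handler function. The toy version below uses only the standard library to illustrate that idea. `ToyRouter`, `Handler`, and the `health` function here are hypothetical, and this is not how axum is implemented internally.

```rust
use std::collections::HashMap;

/// A toy handler: takes no input and returns a status code plus a body.
type Handler = fn() -> (u16, String);

/// Hypothetical router: maps (method, path) pairs to handlers.
struct ToyRouter {
    routes: HashMap<(String, String), Handler>,
}

impl ToyRouter {
    fn new() -> Self {
        ToyRouter { routes: HashMap::new() }
    }

    /// Registers a handler, builder-style, similar in spirit to `Router::route`.
    fn route(mut self, method: &str, path: &str, handler: Handler) -> Self {
        self.routes.insert((method.to_string(), path.to_string()), handler);
        self
    }

    /// Dispatches a request. Unknown (method, path) pairs get a 404 here;
    /// axum would answer 405 Method Not Allowed for a known path with the wrong method.
    fn call(&self, method: &str, path: &str) -> (u16, String) {
        match self.routes.get(&(method.to_string(), path.to_string())) {
            Some(handler) => handler(),
            None => (404, String::new()),
        }
    }
}

/// Mirrors the health endpoint you are about to build.
fn health() -> (u16, String) {
    (200, r#"{"healthy":true}"#.to_string())
}
```

For example, `ToyRouter::new().route("GET", "/health", health)` answers `GET /health` with status 200 and falls back to 404 for everything else.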

This is a basic implementation of a web server that does not provide any endpoint you can call yet, but it’s a perfect foundation to add more. Speaking of endpoints, there is one you will almost certainly need anyway: a health check, which container orchestrators like Kubernetes can call to verify that your service is up and running.

Open Cargo.toml once again and add serde to your dependencies like this:

```toml
[package]
edition = "2021"
name = "axum-graphql"
version = "0.1.0"

[dependencies]
axum = "0.6.20"
serde = { version = "1.0.188", features = ["derive"] }
tokio = { version = "1.32.0", features = ["full"] }
```

serde is the default choice for any Rust application that deals with serialization and deserialization. As your endpoint will respond with JSON, you need this crate. Its "derive" feature lets you derive serialization support for any of your structs with a derive macro, and axum’s Json type then turns them into JSON.

Now that serde is a dependency of your project, create a new folder src/routes and add a file mod.rs to it. Open the file and add the following content:

```rust
use axum::{http::StatusCode, response::IntoResponse, Json};
use serde::Serialize;

#[derive(Serialize)] // (1)
struct Health { // (2)
    healthy: bool,
}

pub(crate) async fn health() -> impl IntoResponse { // (3)
    let health = Health { healthy: true };

    (StatusCode::OK, Json(health)) // (4)
}
```

Here is another quick look at what is actually happening in the code above:

(1): derive(Serialize) automatically implements all logic for you such that the struct below can be serialized (into JSON in this case).

(2): Health is just a basic struct for this particular use case. It contains one property and that’s it.

(3): This is the soon-to-be endpoint method. It takes no parameters and returns a type that implements IntoResponse, axum’s trait for anything it can turn into an HTTP response for your users.

(4): This tuple response is one of a few ways you can return responses in axum. In this case, it’s only an HTTP status code combined with a serialized version of your Health struct.

Next, you need to register the endpoint method so that axum can pick it up and call it whenever someone makes an HTTP request to a specific path. In this case, the method will be available under the path /health.

Open src/main.rs and register your new method like this:

```rust
use crate::routes::health;
use axum::{routing::get, Router, Server};

mod routes;

#[tokio::main]
async fn main() {
    let app = Router::new().route("/health", get(health));

    Server::bind(&"0.0.0.0:8000".parse().unwrap())
        .serve(app.into_make_service())
        .await
        .unwrap();
}
```

route("/health", get(health)) tells axum that any HTTP GET requests to /health should call your method health. You can try this out by starting your service through cargo and using curl or another tool to send a test request quickly:

```shell
❯ cargo run
   Compiling axum-graphql v0.1.0 (/Users/oliverjumpertz/projects/axum-graphql)
    Finished dev [unoptimized + debuginfo] target(s) in 1.13s
     Running `target/debug/axum-graphql`
```

Then, in a second terminal:

```shell
❯ curl http://localhost:8000/health
{"healthy":true}
```

If you don’t have a tool like curl at hand, don’t worry. Simply open your browser and navigate to http://localhost:8000/health, where you should see the same JSON response.

Congratulations, you have just set up a pretty basic web server and implemented your first endpoint. That’s no GraphQL API yet, though. This is why you will add GraphQL in the next step and implement a pretty basic schema.

Adding GraphQL

You currently have a basic web server in place. Now it’s time to add GraphQL capabilities to it.

Conceptually, GraphQL (over HTTP) is nothing more than an additional processor sitting on top of a normal HTTP endpoint. It expects a query (and optional variables) to be sent in an HTTP POST request (or in a GET request with query parameters), passes this data to the query processor, and then returns the result to the user. This is why adding GraphQL to any Rust web server is relatively straightforward.
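Here is what such a POST request carries. A GraphQL-over-HTTP POST usually has a JSON body with a `query` string and an optional `variables` object. The sketch below builds that body by hand with the standard library. In practice you would let serde or a client library do this. The helpers `json_escape` and `graphql_post_body` are hypothetical, and the escaping covers only the common cases.

```rust
/// Escapes a string for use inside a JSON string literal (common cases only).
fn json_escape(s: &str) -> String {
    let mut out = String::with_capacity(s.len() + 2);
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Other control characters must be \u-escaped in JSON.
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Builds a GraphQL POST body; `variables_json` must already be a valid JSON object.
fn graphql_post_body(query: &str, variables_json: Option<&str>) -> String {
    match variables_json {
        Some(vars) => format!(r#"{{"query":"{}","variables":{}}}"#, json_escape(query), vars),
        None => format!(r#"{{"query":"{}"}}"#, json_escape(query)),
    }
}
```

Once the hello query below is in place, the equivalent request with curl would be `curl -X POST -H 'Content-Type: application/json' -d '{"query":"{ hello }"}' http://localhost:8000/`.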

In this guide, you will use async-graphql. It is a Rust crate that supports the latest GraphQL specification and additionally supports Apollo Federation (including v2) and more.

Open Cargo.toml once again, and add two more dependencies:

- async-graphql
  - Adds GraphQL support to your Rust service
- async-graphql-axum
  - An integration crate that comes with everything async-graphql needs to work together with axum

Your Cargo.toml should now look like this:

```toml
[package]
edition = "2021"
name = "axum-graphql"
version = "0.1.0"

[dependencies]
async-graphql = "6.0.6"
async-graphql-axum = "6.0.6"
axum = "0.6.20"
serde = { version = "1.0.188", features = ["derive"] }
tokio = { version = "1.32.0", features = ["full"] }
```

Now that you have async-graphql in your project, you can start to add GraphQL support to your service.

A GraphQL service needs some kind of schema and model to work. In async-graphql’s case, you can use a code-first approach to schema design. This means you create the schema right inside your code, add macros to it, and the crate correctly maps everything for you.

Create a new folder src/model and add a file mod.rs to it. Then add the following content (as your first model/schema):

```rust
use async_graphql::{Context, Object, Schema};
use async_graphql::{EmptyMutation, EmptySubscription};

pub(crate) type ServiceSchema = Schema<QueryRoot, EmptyMutation, EmptySubscription>;

pub(crate) struct QueryRoot; // (1)

#[Object] // (2)
impl QueryRoot { // (3)
    async fn hello(&self, _ctx: &Context<'_>) -> &'static str { // (4)
        "Hello world"
    }
}
```

Let’s quickly go over what this code actually does:

(1): This is the Query object within your schema. It is the root of all queries users can use at your service.

(2): The Object macro wires your Rust struct together with the underlying framework logic of async-graphql.

(3): The implementation of QueryRoot contains all queries your service supports.

(4): hello is your first query. It just returns a static string for now.

It is time to create the handler functions to process incoming GraphQL queries. Open src/routes/mod.rs and add the following code:

```rust
use crate::model::ServiceSchema;
use async_graphql::http::{playground_source, GraphQLPlaygroundConfig};
use async_graphql_axum::{GraphQLRequest, GraphQLResponse};
use axum::{
    extract::Extension,
    http::StatusCode,
    response::{Html, IntoResponse},
    Json,
};
use serde::Serialize;

#[derive(Serialize)]
struct Health {
    healthy: bool,
}

pub(crate) async fn health() -> impl IntoResponse {
    let health = Health { healthy: true };

    (StatusCode::OK, Json(health))
}

pub(crate) async fn graphql_playground() -> impl IntoResponse {
    Html(playground_source( // (1)
        GraphQLPlaygroundConfig::new("/").subscription_endpoint("/ws"),
    ))
}

pub(crate) async fn graphql_handler(
    Extension(schema): Extension<ServiceSchema>, // (2)
    req: GraphQLRequest,
) -> GraphQLResponse {
    schema.execute(req.into_inner()).await.into() // (3)
}
```

You are slowly getting closer to finishing basic GraphQL support for your Rust service, but there are a few new things for you to understand first:

(1): async-graphql comes with a full implementation of a GraphQL Playground. Thankfully, you can just call it as a function and wrap it within axum’s Html helper that takes care of returning everything correctly.

(2): The GraphQL handler function receives both the request and, even more importantly, an instance of the schema you designed and implemented. Extension is an axum extractor that gives your handler functions access to shared data and other context you attach to the router.

(3): Remember when I told you that GraphQL over HTTP is conceptually nothing but an API endpoint with a special processor? This is exactly what happens here. The actual logic is implemented within schema.execute(...) and is thus the only call you need to perform here.

Now that you have those new handlers, it’s time to integrate them. Open src/main.rs again and register both the playground and the handler like this:

```rust
use crate::model::QueryRoot;
use crate::routes::{graphql_handler, graphql_playground, health};
use async_graphql::{EmptyMutation, EmptySubscription, Schema};
use axum::{extract::Extension, routing::get, Router, Server};

mod model;
mod routes;

#[tokio::main]
async fn main() {
    let schema = Schema::build(QueryRoot, EmptyMutation, EmptySubscription).finish();

    let app = Router::new()
        .route("/", get(graphql_playground).post(graphql_handler))
        .route("/health", get(health))
        .layer(Extension(schema)); // (1)

    Server::bind(&"0.0.0.0:8000".parse().unwrap())
        .serve(app.into_make_service())
        .await
        .unwrap();
}
```

There is only one thing in the code above that you need to understand:

(1): Your endpoint needs access to your compiled schema. Providing axum with a layer does exactly this: the schema you built a few lines above is passed into axum so that you can access it within your GraphQL handler.
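Passing the schema through Extension works well because axum clones the extension for every request. As far as I know, async-graphql’s Schema is a thin wrapper around an `Arc`, so each clone only bumps a reference count. The std-only sketch below imitates that pattern with a hypothetical `SharedSchema` type. It is not async-graphql’s actual implementation.

```rust
use std::sync::Arc;

/// Stand-in for the expensive, immutable data a compiled schema holds.
struct SchemaInner {
    type_names: Vec<String>,
}

/// Hypothetical cheap-to-clone handle: cloning copies a pointer, not the data.
#[derive(Clone)]
struct SharedSchema(Arc<SchemaInner>);

impl SharedSchema {
    fn build(type_names: &[&str]) -> Self {
        SharedSchema(Arc::new(SchemaInner {
            type_names: type_names.iter().map(|s| s.to_string()).collect(),
        }))
    }

    fn type_count(&self) -> usize {
        self.0.type_names.len()
    }
}

/// Simulates one request: the handler receives its own clone and drops it afterwards.
fn handle_request(schema: SharedSchema) -> usize {
    schema.type_count()
}
```

Both handles point to the same allocation, and the extra reference disappears as soon as the request is done.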

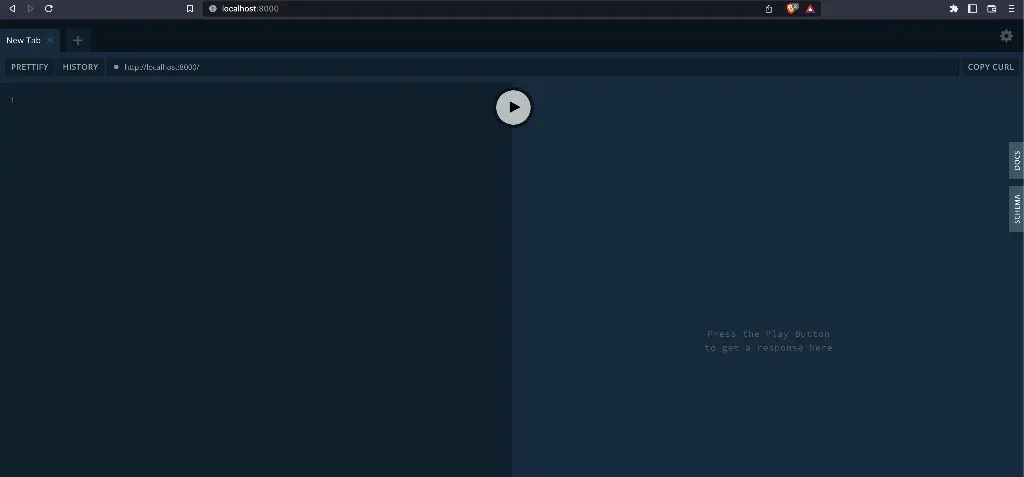

It’s now time to test whether your GraphQL API works. Start your service with cargo run, open a browser and navigate to http://localhost:8000. You should be represented with a view similar to this:

This is the Playground. You can enter queries here and play around (hence the name) with your API.

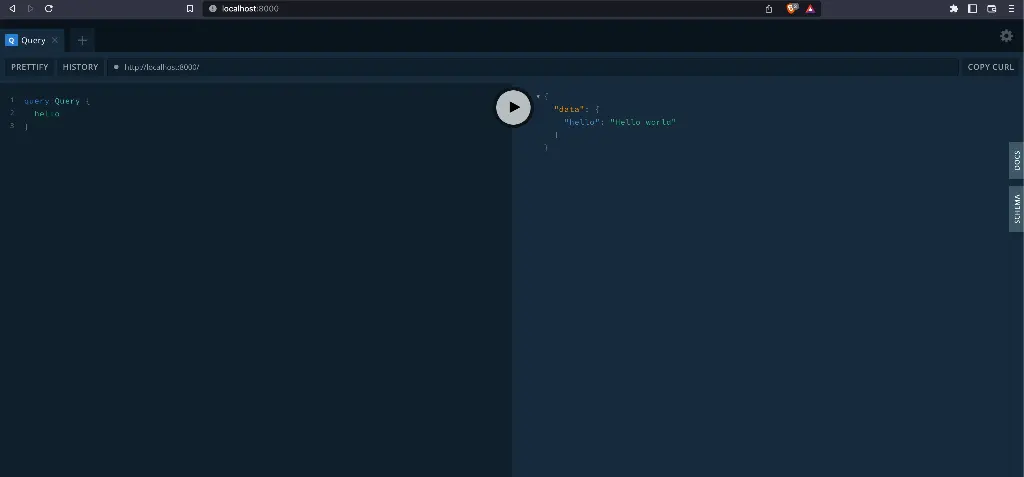

Try running the query you previously implemented to test whether your implementation works. Enter the query on the left side and click on the play button:

When you enter queries on the left side, you can press the play button and run those queries. The response then shows up on the right side.

If your service successfully returns the response you see in the image above, you have finished setting up a basic GraphQL API with Rust. You will next take care of your API’s observability by adding metrics to your service.

Adding Metrics

Most microservices run in Docker containers that are deployed to Kubernetes nowadays. Usually, someone must actually take a look at how these services perform when they are deployed. Metrics are one way to make your service observable. By emitting certain key metrics, you get a better clue about how your GraphQL API performs and what problems it might encounter while running and processing requests. A service like Prometheus regularly scrapes an endpoint that exposes metrics, and those metrics can then be used to create graphs in tools like Grafana.

Open your Cargo.toml again and add two new dependencies:

- metrics
  - Provides a facade for recording metrics. The actual collection and storage is handled by whichever recorder you install
- metrics-exporter-prometheus
  - Provides such a recorder and exposes the recorded metrics in a Prometheus-compatible format

Overall, the file should now look like this:

```toml
[package]
edition = "2021"
name = "axum-graphql"
version = "0.1.0"

[dependencies]
async-graphql = "6.0.6"
async-graphql-axum = "6.0.6"
axum = "0.6.20"
metrics = "0.21.1"
metrics-exporter-prometheus = "0.12.1"
serde = { version = "1.0.188", features = ["derive"] }
tokio = { version = "1.32.0", features = ["full"] }
```

With these two dependencies added, you can begin setting up your metrics. The integration is relatively straightforward. Conceptually, metrics require some form of registry where they are registered. Whenever you want to work with a registered metric, you retrieve it from the registry and then record a new value, increment a counter, or similar.

This means you must at least set up the metrics registry once and integrate it into axum. You also want to record some metrics, so you will add two of the most basic ones:

- Counting the number of requests against your API

- Recording how long each request actually takes
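The following std-only sketch shows what a registry does at its core. It is a deliberately tiny counter registry that renders samples in the Prometheus text format. `CounterRegistry` and its methods are hypothetical. The metrics and metrics-exporter-prometheus crates do this, and much more, for you, including `# TYPE` lines and escaping of label values, which this sketch omits.

```rust
use std::collections::BTreeMap;

/// Labels as sorted (name, value) pairs, e.g. [("method", "GET"), ("path", "/health")].
type Labels = Vec<(String, String)>;

/// Hypothetical minimal registry: one counter per (metric name, label set).
#[derive(Default)]
struct CounterRegistry {
    // BTreeMap keeps the rendered output in a deterministic order.
    counters: BTreeMap<(String, Labels), u64>,
}

impl CounterRegistry {
    /// Increments a counter, creating it at zero on first use.
    fn increment(&mut self, name: &str, labels: &[(&str, &str)]) {
        let mut key_labels: Labels = labels
            .iter()
            .map(|(k, v)| (k.to_string(), v.to_string()))
            .collect();
        // Sort so the same labels in a different order hit the same counter.
        key_labels.sort();
        *self.counters.entry((name.to_string(), key_labels)).or_insert(0) += 1;
    }

    /// Renders every counter as one `name{labels} value` line (label values unescaped).
    fn render(&self) -> String {
        let mut out = String::new();
        for ((name, labels), value) in &self.counters {
            let rendered: Vec<String> = labels
                .iter()
                .map(|(k, v)| format!("{k}=\"{v}\""))
                .collect();
            out.push_str(&format!("{name}{{{}}} {value}\n", rendered.join(",")));
        }
        out
    }
}
```

Two increments of `http_requests_total` with the same labels render as a single line, `http_requests_total{method="GET",path="/health"} 2`.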

Create a new folder src/observability and add a file metrics.rs. Then add the following code:

```rust
use axum::{extract::MatchedPath, http::Request, middleware::Next, response::IntoResponse};
use metrics_exporter_prometheus::{Matcher, PrometheusBuilder, PrometheusHandle};
use std::time::Instant;

const REQUEST_DURATION_METRIC_NAME: &str = "http_requests_duration_seconds";

pub(crate) fn create_prometheus_recorder() -> PrometheusHandle { // (1)
    const EXPONENTIAL_SECONDS: &[f64] = &[
        0.005, 0.01, 0.025, 0.05, 0.1, 0.25, 0.5, 1.0, 2.5, 5.0, 10.0,
    ];

    PrometheusBuilder::new()
        .set_buckets_for_metric( // (2)
            Matcher::Full(REQUEST_DURATION_METRIC_NAME.to_string()),
            EXPONENTIAL_SECONDS,
        )
        .unwrap_or_else(|_| {
            panic!(
                "Could not initialize the bucket for '{}'",
                REQUEST_DURATION_METRIC_NAME
            )
        })
        .install_recorder()
        .expect("Could not install the Prometheus recorder")
}

pub(crate) async fn track_metrics<B>(req: Request<B>, next: Next<B>) -> impl IntoResponse { // (3)
    let start = Instant::now();
    let path = if let Some(matched_path) = req.extensions().get::<MatchedPath>() {
        matched_path.as_str().to_owned()
    } else {
        req.uri().path().to_owned()
    };
    let method = req.method().clone();

    let response = next.run(req).await;

    let latency = start.elapsed().as_secs_f64();
    let status = response.status().as_u16().to_string();

    let labels = [
        ("method", method.to_string()),
        ("path", path),
        ("status", status),
    ];

    metrics::increment_counter!("http_requests_total", &labels); // (4)
    metrics::histogram!(REQUEST_DURATION_METRIC_NAME, latency, &labels);

    response
}
```

To give you a better idea of what exactly happens in the code above, let’s take a closer look again:

(1): create_prometheus_recorder is a simple function that builds the Prometheus recorder, installs it globally for the metrics crate, and returns a PrometheusHandle. The handle comes from the metrics-exporter-prometheus crate. Put simply, it gives you access to the recorder (the registry where all the metrics you record are stored), so that you can later render its contents.

(2): Buckets are needed for so-called histograms. You can imagine them as real buckets placed next to each other, each with an upper bound. Whenever you record a new value for the histogram, it is sorted into the smallest bucket whose upper bound it does not exceed. (Prometheus reports buckets cumulatively, so each bucket also includes the counts of all smaller ones.) This means that you don’t get exact values, though, only how your values are distributed across the buckets.

(3): This function’s task is to record the duration a request to your service takes. You will register it as a middleware for axum in a second. Whenever a request hits your API, this code is run, collects the duration the request took, and also increments a counter, so you can track how many requests your API has already served.

(4): These macros make it a little easier to work with metrics. You would usually have to get an instance of the metrics registry and add metrics to or retrieve existing metrics from it. Thanks to these macros, you only have to do exactly one call and don’t have to worry about further implementation details.
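The bucket idea from (2) can be sketched in a few lines of plain Rust. The `Histogram` type below is hypothetical and not how metrics-exporter-prometheus stores data. It reuses the bucket bounds from `create_prometheus_recorder`. It also shows the cumulative view Prometheus exposes: the bucket for an upper bound counts every observation less than or equal to that bound, and a final `+Inf` bucket counts everything.

```rust
/// Same upper bounds (in seconds) as EXPONENTIAL_SECONDS in metrics.rs.
const BOUNDS: &[f64] = &[
    0.005, 0.01, 0.025, 0.05, 0.1, 0.25, 0.5, 1.0, 2.5, 5.0, 10.0,
];

/// Hypothetical minimal histogram: per-bucket counts, overflow, and the sum of values.
struct Histogram {
    // counts[i] holds observations whose smallest fitting bound is BOUNDS[i].
    counts: Vec<u64>,
    // Observations larger than the largest bound (they only show up in +Inf).
    overflow: u64,
    sum: f64,
}

impl Histogram {
    fn new() -> Self {
        Histogram { counts: vec![0; BOUNDS.len()], overflow: 0, sum: 0.0 }
    }

    /// Records one observation in the smallest bucket it fits into.
    fn observe(&mut self, value: f64) {
        self.sum += value;
        match BOUNDS.iter().position(|&bound| value <= bound) {
            Some(i) => self.counts[i] += 1,
            None => self.overflow += 1,
        }
    }

    /// Cumulative count for the bucket with upper bound BOUNDS[i], as Prometheus reports it.
    fn cumulative(&self, i: usize) -> u64 {
        self.counts[..=i].iter().sum()
    }

    /// The +Inf bucket: the total number of observations.
    fn total(&self) -> u64 {
        self.counts.iter().sum::<u64>() + self.overflow
    }
}
```

A request taking 0.03 seconds, for example, lands in the 0.05 bucket and is then also counted by every larger bucket in the cumulative view.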

Before you can use this submodule, though, you need to create another new file src/observability/mod.rs and add the following content:

```rust
pub(crate) mod metrics;
```

To integrate your newly created module into your service, you have to make a few more changes to your main.rs. Open the file and add the following code, which should make the whole file look as follows:

```rust
use crate::model::QueryRoot;
use crate::observability::metrics::{create_prometheus_recorder, track_metrics};
use crate::routes::{graphql_handler, graphql_playground, health};
use async_graphql::{EmptyMutation, EmptySubscription, Schema};
use axum::{extract::Extension, middleware, routing::get, Router, Server};
use std::future::ready;

mod model;
mod observability;
mod routes;

#[tokio::main]
async fn main() {
    let schema = Schema::build(QueryRoot, EmptyMutation, EmptySubscription).finish();

    let prometheus_recorder = create_prometheus_recorder();

    let app = Router::new()
        .route("/", get(graphql_playground).post(graphql_handler))
        .route("/health", get(health))
        .route("/metrics", get(move || ready(prometheus_recorder.render()))) // (1)
        .route_layer(middleware::from_fn(track_metrics)) // (2)
        .layer(Extension(schema));

    Server::bind(&"0.0.0.0:8000".parse().unwrap())
        .serve(app.into_make_service())
        .await
        .unwrap();
}
```

Here are a few more explanations:

(1): This line looks a little clumsy, but in the end, it does nothing more than wire your recorder to an HTTP GET request at /metrics.

(2): This integrates your tracking function as an axum middleware.

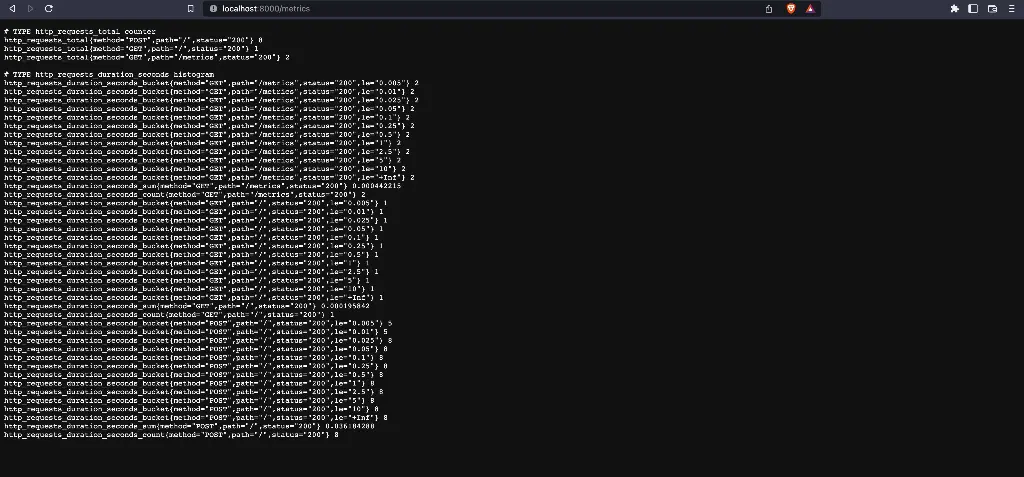

To test whether you successfully integrated metrics into your service, start your service with cargo run, open your browser again, navigate to http://localhost:8000 and fire a few queries. After that, navigate to http://localhost:8000/metrics, and you should see a few metrics returned like seen below:

Prometheus-style metrics returned by your newly integrated metrics endpoint.

From now on, you can use the metrics crate’s macros to register new metrics or update existing ones. What you track and where is entirely up to you. Just remember that it is usually better to add a metric and remove it again once you realize you don’t need it than to be left without insight when something goes wrong.

Adding Tracing

Tracing is another way to improve the observability of your GraphQL API. Tracing usually tracks the execution of code paths by putting some form of context around them, called spans. Within these spans, certain events can occur, and all these events are attached to their respective span. Spans, in turn, are attached to their parent span, all the way up to the root span. All spans of one trace share the same trace ID, so if you know it, you can easily follow any request or execution of code.
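To make the parent/child relationship concrete, here is a std-only sketch of spans that point to their parent and share a trace ID. The `Span` type and its fields are hypothetical and much simpler than OpenTelemetry’s data model, which also records timestamps, attributes, and events.

```rust
use std::collections::HashMap;

/// Hypothetical, heavily simplified span record.
#[derive(Debug)]
struct Span {
    trace_id: u128,
    span_id: u64,
    parent_id: Option<u64>,
    name: &'static str,
}

/// Walks from a span up through its parents (assumes no cycles), leaf first.
fn path_to_root(spans: &HashMap<u64, Span>, start: u64) -> Vec<&'static str> {
    let mut names = Vec::new();
    let mut current = spans.get(&start);
    while let Some(span) = current {
        names.push(span.name);
        current = span.parent_id.and_then(|parent| spans.get(&parent));
    }
    names
}

/// Counts the spans that belong to one trace, i.e. to one request.
fn spans_in_trace(spans: &HashMap<u64, Span>, trace_id: u128) -> usize {
    spans.values().filter(|s| s.trace_id == trace_id).count()
}
```

With a root span for `POST /`, a child for the GraphQL execution, and a grandchild for a resolver, walking up from the resolver yields the whole chain back to the root.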

Jaeger is an end-to-end distributed tracing solution. It has its own frontend and multiple supporting services that receive and collect traces from microservices. Nowadays, it builds on OpenTelemetry, an open standard for distributed tracing.

There are implementations of OpenTelemetry for most languages, and Rust is no different. Open your Cargo.toml again and add the following dependencies:

- tracing
  - Implements a facade (like metrics) and provides macros that make it easy to emit traces
- tracing-opentelemetry
  - Adds support for OpenTelemetry
- tracing-subscriber
  - Consists of utilities that make it easier to combine multiple outputs, like exporting to Jaeger and logging to stdout
- opentelemetry
  - Implements the OpenTelemetry standard for clients
- opentelemetry-jaeger
  - Adds interoperability with Jaeger
- dotenv
  - Does not have much to do with tracing itself, but always having to run a Jaeger agent or collector can hinder local development. You will use dotenv to quickly enable or disable tracing

After you added the dependencies, your Cargo.toml should look like this:

```toml
[package]
edition = "2021"
name = "axum-graphql"
version = "0.1.0"

[dependencies]
async-graphql = "6.0.6"
async-graphql-axum = "6.0.6"
axum = "0.6.20"
dotenv = "0.15.0"
metrics = "0.21.1"
metrics-exporter-prometheus = "0.12.1"
opentelemetry = { version = "0.20.0", features = ["rt-tokio"] }
opentelemetry-jaeger = { version = "0.19.0", features = ["rt-tokio"] }
serde = { version = "1.0.188", features = ["derive"] }
tokio = { version = "1.32.0", features = ["full"] }
tracing = "0.1.37"
tracing-opentelemetry = "0.21.0"
tracing-subscriber = { version = "0.3.17", features = ["std", "env-filter"] }
```

Now that you have another set of dependencies in your project, it’s time to get back to code. You need to create a tracer that you can use to actually emit tracing events. In this case, you will create a Jaeger agent pipeline that sends these events to a Jaeger agent (usually a sidecar running next to your service pod on Kubernetes). It comes in the form of a builder that ultimately returns a Tracer you can use later.

Create a new file in src/observability named tracing.rs, and add the following code:

```rust
use opentelemetry::sdk::trace::{self, Sampler};
use opentelemetry::{
    global, runtime::Tokio, sdk::propagation::TraceContextPropagator, sdk::trace::Tracer,
};
use std::env;

struct JaegerConfig { // (1)
    jaeger_agent_host: String,
    jaeger_agent_port: String,
    jaeger_tracing_service_name: String,
}

pub fn create_tracer_from_env() -> Option<Tracer> {
    let jaeger_enabled: bool = env::var("JAEGER_ENABLED")
        .unwrap_or_else(|_| "false".into())
        .parse()
        .expect("JAEGER_ENABLED must be either `true` or `false`");

    if jaeger_enabled {
        let config = get_jaeger_config_from_env();
        Some(init_tracer(config))
    } else {
        None
    }
}

fn init_tracer(config: JaegerConfig) -> Tracer {
    global::set_text_map_propagator(TraceContextPropagator::new()); // (2)
    opentelemetry_jaeger::new_agent_pipeline() // (3)
        .with_endpoint(format!(
            "{}:{}",
            config.jaeger_agent_host, config.jaeger_agent_port
        ))
        .with_auto_split_batch(true)
        .with_service_name(config.jaeger_tracing_service_name)
        .with_trace_config(trace::config().with_sampler(Sampler::AlwaysOn))
        .install_batch(Tokio)
        .expect("pipeline install error")
}

fn get_jaeger_config_from_env() -> JaegerConfig {
    JaegerConfig {
        jaeger_agent_host: env::var("JAEGER_AGENT_HOST").unwrap_or_else(|_| "localhost".into()),
        jaeger_agent_port: env::var("JAEGER_AGENT_PORT").unwrap_or_else(|_| "6831".into()),
        jaeger_tracing_service_name: env::var("TRACING_SERVICE_NAME")
            .unwrap_or_else(|_| "axum-graphql".into()),
    }
}
```

This is a little more code again, so let’s quickly go over its most important parts:

(1): This is only a helper struct to hold the properties you need to configure the tracer. In this case, it’s a way to set the host and port of an agent, as well as the name its traces are registered with (the defaults are usually sufficient when your service runs in Kubernetes with a Jaeger sidecar attached).

(2): Traces can be propagated between multiple services. This line ensures that trace context is propagated through the traceparent header, as defined by the W3C Trace Context specification (you can read more about it here).

(3): The pipeline is the actual core logic to create a tracer. In this case, a so-called agent pipeline expects to send traces to a Jaeger agent. It is configured with a few more settings, like setting an explicit service name (to find your service’s traces in Jaeger’s UI) and setting the Sampler to always on, which means that no spans are thrown away, and everything is stored.
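For reference, the W3C Trace Context specification defines a `traceparent` header that looks like `00-<32 hex trace-id>-<16 hex parent-id>-<2 hex trace-flags>`. The std-only sketch below parses that header to show what the propagator from (2) reads and writes. `TraceParent` and `parse_traceparent` are hypothetical names. The sketch only accepts version `00`. The opentelemetry crate’s propagator already handles all of this for you.

```rust
/// Parsed fields of a version-00 W3C `traceparent` header.
#[derive(Debug, PartialEq)]
struct TraceParent {
    trace_id: u128,
    parent_id: u64,
    sampled: bool,
}

/// Returns true if `s` consists of exactly `len` lowercase hex digits.
fn is_lower_hex(s: &str, len: usize) -> bool {
    s.len() == len && s.bytes().all(|b| b.is_ascii_digit() || (b'a'..=b'f').contains(&b))
}

/// Parses "version-traceid-parentid-flags"; returns None for malformed headers.
fn parse_traceparent(header: &str) -> Option<TraceParent> {
    let parts: Vec<&str> = header.trim().split('-').collect();
    if parts.len() != 4 {
        return None;
    }
    let (version, trace, parent, flags) = (parts[0], parts[1], parts[2], parts[3]);
    if version != "00"
        || !is_lower_hex(trace, 32)
        || !is_lower_hex(parent, 16)
        || !is_lower_hex(flags, 2)
    {
        return None;
    }
    let trace_id = u128::from_str_radix(trace, 16).ok()?;
    let parent_id = u64::from_str_radix(parent, 16).ok()?;
    let flags = u8::from_str_radix(flags, 16).ok()?;
    // All-zero trace and parent IDs are invalid according to the specification.
    if trace_id == 0 || parent_id == 0 {
        return None;
    }
    // The lowest flag bit is the "sampled" flag.
    Some(TraceParent { trace_id, parent_id, sampled: (flags & 0x01) == 0x01 })
}
```

The example header from the specification, `00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01`, parses into a sampled trace context.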

Next, you need to add your newly created module to src/observability/mod.rs like this:

```rust
pub(crate) mod metrics;
pub(crate) mod tracing;
```

Now that your new module is accessible, it's time to integrate everything into your service. Open `src/main.rs` once again and add the following code:

```rust
use crate::model::QueryRoot;
use crate::observability::metrics::{create_prometheus_recorder, track_metrics};
use crate::observability::tracing::create_tracer_from_env;
use crate::routes::{graphql_handler, graphql_playground, health};
use async_graphql::{EmptyMutation, EmptySubscription, Schema};
use axum::{extract::Extension, middleware, routing::get, Router, Server};
use dotenv::dotenv;
use std::future::ready;
use tracing::info;
use tracing_subscriber::layer::SubscriberExt;
use tracing_subscriber::util::SubscriberInitExt;
use tracing_subscriber::Registry;

mod model;
mod observability;
mod routes;

#[tokio::main]
async fn main() {
    dotenv().ok(); // (1)

    let schema = Schema::build(QueryRoot, EmptyMutation, EmptySubscription).finish();

    let prometheus_recorder = create_prometheus_recorder();

    let registry = Registry::default().with(tracing_subscriber::fmt::layer().pretty()); // (2)

    match create_tracer_from_env() { // (3)
        Some(tracer) => registry
            .with(tracing_opentelemetry::layer().with_tracer(tracer))
            .try_init()
            .expect("Failed to register tracer with registry"),
        None => registry
            .try_init()
            .expect("Failed to register tracer with registry"),
    }

    info!("Service starting"); // (4)

    let app = Router::new()
        .route("/", get(graphql_playground).post(graphql_handler))
        .route("/health", get(health))
        .route("/metrics", get(move || ready(prometheus_recorder.render())))
        .route_layer(middleware::from_fn(track_metrics))
        .layer(Extension(schema));

    Server::bind(&"0.0.0.0:8000".parse().unwrap())
        .serve(app.into_make_service())
        .await
        .unwrap();
}
```

There is some new code to understand again, so let’s go over the important parts:

(1): This is all you need to set up dotenv (`.ok()` simply ignores the error if there is no `.env` file). In a moment, you will create a small `.env` file to quickly enable or disable the Jaeger exporter when developing locally.

(2): The `Registry` is where so-called tracing layers are registered. Here, a formatting layer that pretty-prints events to stdout is added. This ensures that you always have some log messages for debugging purposes.

(3): Jaeger export can be disabled, which is why `create_tracer_from_env` returns an `Option` and you need to check whether there is a tracer to register in addition to stdout logging. If there is none, the service runs with stdout logging only. If Jaeger is enabled, both stdout logging and export to a Jaeger agent get registered.

(4): The `info!` macro is one of several ways to log or trace specific events.
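Point (3) boils down to a function that returns `Some(tracer)` when the exporter is enabled and `None` otherwise. The body of `create_tracer_from_env` isn’t repeated in this part, so the following is only a sketch under an assumption: that `JAEGER_ENABLED` is parsed with `str::parse::<bool>` and falls back to `false` when it is missing or invalid. `Tracer`, `jaeger_enabled`, and `create_tracer` are hypothetical stand-ins, not the OpenTelemetry types.

```rust
use std::env;

/// Hypothetical stand-in for the OpenTelemetry tracer the real code builds.
#[derive(Debug, PartialEq)]
struct Tracer {
    service_name: String,
}

/// Assumption: only "true" (after trimming) enables export;
/// a missing or unparsable value falls back to `false`.
fn jaeger_enabled(raw: Option<&str>) -> bool {
    raw.and_then(|value| value.trim().parse::<bool>().ok())
        .unwrap_or(false)
}

/// Returns `Some` only when export is enabled, mirroring the shape of `create_tracer_from_env`.
fn create_tracer(raw_enabled: Option<&str>, service_name: &str) -> Option<Tracer> {
    if jaeger_enabled(raw_enabled) {
        Some(Tracer { service_name: service_name.to_string() })
    } else {
        None
    }
}

fn main() {
    let raw = env::var("JAEGER_ENABLED").ok();
    match create_tracer(raw.as_deref(), "axum-graphql") {
        Some(tracer) => println!("stdout logging + Jaeger export as {}", tracer.service_name),
        None => println!("stdout logging only"),
    }
}
```

Keeping the parsing in a small pure function like `jaeger_enabled` makes the toggle easy to unit test without touching the process environment.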

Now that tracing is integrated, it’s a good idea to create some traces. One place where a span definitely belongs is around every call to your GraphQL endpoint: a root span to which all other spans and events created while processing a GraphQL request are attached.

Jump back into src/routes/mod.rs and change the code to the following:

```rust
use crate::model::ServiceSchema;
use async_graphql::http::{playground_source, GraphQLPlaygroundConfig};
use async_graphql_axum::{GraphQLRequest, GraphQLResponse};
use axum::{
    extract::Extension,
    http::StatusCode,
    response::{Html, IntoResponse},
    Json,
};
use opentelemetry::trace::TraceContextExt;
use serde::Serialize;
use tracing::{info, span, Instrument, Level};
use tracing_opentelemetry::OpenTelemetrySpanExt;

#[derive(Serialize)]
struct Health {
    healthy: bool,
}

pub(crate) async fn health() -> impl IntoResponse {
    let health = Health { healthy: true };

    (StatusCode::OK, Json(health))
}

pub(crate) async fn graphql_playground() -> impl IntoResponse {
    Html(playground_source(
        GraphQLPlaygroundConfig::new("/").subscription_endpoint("/ws"),
    ))
}

pub(crate) async fn graphql_handler(
    Extension(schema): Extension<ServiceSchema>,
    req: GraphQLRequest,
) -> GraphQLResponse {
    let span = span!(Level::INFO, "graphql_execution"); // (1)

    info!("Processing GraphQL request");

    let response = async move { schema.execute(req.into_inner()).await } // (2)
        .instrument(span.clone())
        .await;

    info!("Processing GraphQL request finished");

    response
        .extension( // (3)
            "traceId",
            async_graphql::Value::String(format!(
                "{}",
                span.context().span().span_context().trace_id()
            )),
        )
        .into()
}
```

Let’s see what happens in the most important parts of the code above:

(1): A span is a context, so to speak: all events and further spans created while it is active are attached to it. This groups events that belong together semantically and technically.

(2): To understand why this async block is necessary, you need to know how async functions work. An async function can suspend its execution at any `.await` point, letting the executor run other tasks on the same thread in the meantime. A span is entered through a guard and stays entered until that guard is dropped. If you held such a guard across an `.await`, it would not be dropped while the function is suspended, so events emitted by unrelated tasks in the meantime would wrongly be attributed to this span. Instrumenting the async block with `.instrument(span)` avoids this: the span is entered only while the wrapped future is being polled and exited whenever it yields.

(3): Having spans and traces is great, but it’s also a good idea to return the trace ID so that a user who encounters an error can give you something to search for. This is why the trace ID is put into the `extensions` of the GraphQL response.
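The guard problem from point (2) is easier to see in a small simulation. This sketch uses only the standard library and is a model of the behavior, not how the `tracing` crate is implemented: `enter` mimics a span guard by setting a thread-local "current span", `Instrumented` mimics `Instrument::instrument` by entering the span only for the duration of each `poll`, and `run_round_robin` is a toy single-threaded executor that interleaves two tasks. All of these names are hypothetical.

```rust
use std::cell::{Cell, RefCell};
use std::future::Future;
use std::pin::Pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

type Task = Pin<Box<dyn Future<Output = ()>>>;
type Log = Vec<(Option<&'static str>, &'static str)>;

thread_local! {
    // Toy model of "the span currently entered on this thread".
    static CURRENT: Cell<Option<&'static str>> = Cell::new(None);
    // Every event together with the span it was attributed to.
    static LOG: RefCell<Log> = RefCell::new(Vec::new());
}

fn event(msg: &'static str) {
    let span = CURRENT.with(|c| c.get());
    LOG.with(|log| log.borrow_mut().push((span, msg)));
}

fn take_log() -> Log {
    LOG.with(|log| std::mem::take(&mut *log.borrow_mut()))
}

/// Guard-style: the span stays current until the guard is dropped.
struct Entered(Option<&'static str>);
fn enter(span: &'static str) -> Entered {
    Entered(CURRENT.with(|c| c.replace(Some(span))))
}
impl Drop for Entered {
    fn drop(&mut self) {
        CURRENT.with(|c| c.set(self.0)); // restore the previously entered span
    }
}

/// Instrument-style: the span is current only while the inner future is polled.
struct Instrumented { span: &'static str, inner: Task }
impl Future for Instrumented {
    type Output = ();
    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<()> {
        let _guard = enter(self.span); // exited again when this poll returns
        self.inner.as_mut().poll(cx)
    }
}

/// Returns `Pending` once, like an `.await` point where the executor switches tasks.
struct YieldNow(bool);
impl Future for YieldNow {
    type Output = ();
    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<()> {
        if self.0 { return Poll::Ready(()); }
        self.0 = true;
        cx.waker().wake_by_ref(); // ask to be polled again
        Poll::Pending
    }
}

struct NoopWaker;
impl Wake for NoopWaker { fn wake(self: Arc<Self>) {} }

fn task(f: impl Future<Output = ()> + 'static) -> Task { Box::pin(f) }

/// Toy executor: polls every unfinished task once per round until all are done.
fn run_round_robin(mut tasks: Vec<Task>) {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    while !tasks.is_empty() {
        tasks.retain_mut(|t| t.as_mut().poll(&mut cx).is_pending());
    }
}

async fn task_a() { event("a: start"); YieldNow(false).await; event("a: end"); }
async fn task_b() { event("b: start"); YieldNow(false).await; event("b: end"); }
async fn task_a_with_guard() {
    let _guard = enter("request_a"); // held across the .await inside task_a
    task_a().await;
}

fn main() {
    run_round_robin(vec![task(task_a_with_guard()), task(task_b())]);
    let guarded = take_log();
    run_round_robin(vec![
        task(Instrumented { span: "request_a", inner: task(task_a()) }),
        task(task_b()),
    ]);
    let instrumented = take_log();
    for (name, log) in [("guard held across .await", guarded), ("instrumented", instrumented)] {
        println!("{}:", name);
        for (span, msg) in log {
            println!("  {:<10} -> {:?}", msg, span);
        }
    }
}
```

In the guard version, task B’s first event is attributed to `request_a` because the guard was still alive while task A was suspended. In the instrumented version, only task A’s events carry the span.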

Remember that Jaeger tracing can be disabled? It is disabled by default, so if you want to enable it locally, you must start your service with the `JAEGER_ENABLED` environment variable set to `true`. A more convenient way is to use a `.env` file that you can quickly open, adjust, and then restart your service.
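Loading a `.env` file essentially means reading `KEY=VALUE` lines and exposing them as environment variables. The following is a deliberately simplified parser (blank lines, `#` comment lines, optional double quotes), not the `dotenv` crate’s implementation, which supports more syntax. `parse_dotenv` and `resolve` are hypothetical helpers; `resolve` models the precedence `dotenv()` uses, where a variable that is already set in the process environment is not overridden by the file.

```rust
use std::collections::HashMap;
use std::env;

/// Simplified `.env` parser: one `KEY=VALUE` per line, `#` starts a comment line,
/// and a value may be wrapped in double quotes. Lines without `=` are ignored.
fn parse_dotenv(contents: &str) -> HashMap<String, String> {
    let mut vars = HashMap::new();
    for line in contents.lines() {
        let line = line.trim();
        if line.is_empty() || line.starts_with('#') {
            continue;
        }
        if let Some((key, value)) = line.split_once('=') {
            let value = value.trim();
            // Strip one pair of surrounding double quotes, if present.
            let value = value
                .strip_prefix('"')
                .and_then(|v| v.strip_suffix('"'))
                .unwrap_or(value);
            vars.insert(key.trim().to_string(), value.to_string());
        }
    }
    vars
}

/// A value already present in the process environment wins over the file.
fn resolve(key: &str, from_process: Option<String>, file: &HashMap<String, String>) -> Option<String> {
    from_process.or_else(|| file.get(key).cloned())
}

fn main() {
    let file = parse_dotenv("# local development\nJAEGER_ENABLED=true\nTRACING_SERVICE_NAME=\"axum-graphql\"\n");
    let enabled = resolve("JAEGER_ENABLED", env::var("JAEGER_ENABLED").ok(), &file);
    println!("JAEGER_ENABLED = {:?}", enabled);
}
```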

Quickly add a new file `.env` to the root of your project with the following content, which enables the Jaeger exporter (don’t forget to add `.env` to your `.gitignore`; you never want secrets to leak into a public Git repository!):

```
JAEGER_ENABLED=true
```

You are nearly finished. There is only one last thing you need to do, but let’s first look at the process of tracing at runtime (simplified):

- Your code emits traces

- These traces are collected

- More traces are collected

- Traces are regularly sent over to an agent

This means that there is a short window between collecting traces and shutting down your service in which some traces can be lost. This is especially relevant when your GraphQL API runs within Kubernetes, where pods can be rescheduled at any time. Fortunately, axum’s server supports graceful shutdown, and OpenTelemetry provides a function that explicitly shuts down the tracer provider, exporting any remaining spans before they get lost.
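The window in which traces can be lost is easiest to see with a toy batching exporter. In this sketch, `BatchExporter` is a hypothetical type, not OpenTelemetry’s batch span processor: it buffers span names and "sends" them when the batch is full or the export interval has elapsed. `shutdown` flushes whatever is left, which is the role `shutdown_tracer_provider` plays in your service, while `abort` simulates the process exiting without that final flush.

```rust
use std::time::{Duration, Instant};

/// Hypothetical, simplified batching exporter (not the OpenTelemetry API).
struct BatchExporter {
    buffer: Vec<String>,   // spans collected but not yet sent
    exported: Vec<String>, // stands in for the Jaeger agent
    max_batch_size: usize,
    interval: Duration,
    last_export: Instant,
}

impl BatchExporter {
    fn new(max_batch_size: usize, interval: Duration) -> Self {
        BatchExporter {
            buffer: Vec::new(),
            exported: Vec::new(),
            max_batch_size,
            interval,
            last_export: Instant::now(),
        }
    }

    /// Collects a span and sends the batch once it is full or the interval has passed.
    fn record(&mut self, span: &str) {
        self.buffer.push(span.to_string());
        if self.buffer.len() >= self.max_batch_size || self.last_export.elapsed() >= self.interval {
            self.flush();
        }
    }

    /// Moves everything from the buffer to the "agent".
    fn flush(&mut self) {
        self.exported.append(&mut self.buffer);
        self.last_export = Instant::now();
    }

    /// Graceful path: flush what is left, then report what reached the agent.
    fn shutdown(mut self) -> Vec<String> {
        self.flush();
        self.exported
    }

    /// Abrupt path: the process exits and the buffered spans are dropped unsent.
    fn abort(self) -> Vec<String> {
        self.exported
    }
}

fn main() {
    let hour = Duration::from_secs(3600); // long enough that only the batch size triggers sends
    let mut lost = BatchExporter::new(3, hour);
    let mut kept = BatchExporter::new(3, hour);
    for i in 0..5 {
        let span = format!("span-{}", i);
        lost.record(&span);
        kept.record(&span);
    }
    println!("without shutdown: {} of 5 spans exported", lost.abort().len()); // 3 of 5
    println!("with shutdown:    {} of 5 spans exported", kept.shutdown().len()); // 5 of 5
}
```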

Open src/main.rs and add the following code to enable your service and traces to shut down gracefully:

```rust
use crate::model::QueryRoot;
use crate::observability::metrics::{create_prometheus_recorder, track_metrics};
use crate::observability::tracing::create_tracer_from_env;
use crate::routes::{graphql_handler, graphql_playground, health};
use async_graphql::{EmptyMutation, EmptySubscription, Schema};
use axum::{extract::Extension, middleware, routing::get, Router, Server};
use dotenv::dotenv;
use std::future::ready;
use tokio::signal;
use tracing::info;
use tracing_subscriber::layer::SubscriberExt;
use tracing_subscriber::util::SubscriberInitExt;
use tracing_subscriber::Registry;

mod model;
mod observability;
mod routes;

async fn shutdown_signal() { // (1)
    let ctrl_c = async {
        signal::ctrl_c()
            .await
            .expect("failed to install Ctrl+C handler");
    };

    #[cfg(unix)]
    let terminate = async {
        signal::unix::signal(signal::unix::SignalKind::terminate())
            .expect("failed to install signal handler")
            .recv()
            .await;
    };

    #[cfg(not(unix))]
    let terminate = std::future::pending::<()>();

    tokio::select! {
        _ = ctrl_c => {},
        _ = terminate => {},
    }

    opentelemetry::global::shutdown_tracer_provider();
}

#[tokio::main]
async fn main() {
    dotenv().ok();

    let schema = Schema::build(QueryRoot, EmptyMutation, EmptySubscription).finish();

    let prometheus_recorder = create_prometheus_recorder();

    let registry = Registry::default().with(tracing_subscriber::fmt::layer().pretty());

    match create_tracer_from_env() {
        Some(tracer) => registry
            .with(tracing_opentelemetry::layer().with_tracer(tracer))
            .try_init()
            .expect("Failed to register tracer with registry"),
        None => registry
            .try_init()
            .expect("Failed to register tracer with registry"),
    }

    info!("Service starting");

    let app = Router::new()
        .route("/", get(graphql_playground).post(graphql_handler))
        .route("/health", get(health))
        .route("/metrics", get(move || ready(prometheus_recorder.render())))
        .route_layer(middleware::from_fn(track_metrics))
        .layer(Extension(schema));

    Server::bind(&"0.0.0.0:8000".parse().unwrap())
        .serve(app.into_make_service())
        .with_graceful_shutdown(shutdown_signal()) // (2)
        .await
        .unwrap();
}
```

Let’s once again take a look at the important lines in the code above:

(1): This function does exactly two things: it first waits for one of two shutdown signals (Ctrl+C, or SIGTERM on Unix systems), and as soon as it receives one, it shuts down the tracer provider so that any remaining spans are exported.

(2): `with_graceful_shutdown` takes a future. When that future completes, the server stops accepting new connections and shuts down gracefully. Here, that happens as soon as `shutdown_signal` has received a termination signal and shut down the tracer provider.

It’s now time to test whether everything works as expected. Conveniently, Jaeger offers an all-in-one Docker image that contains everything you need. This is not how Jaeger should be run in production, but it is enough to test whether your service emits traces as expected.

Open a terminal and execute the following command:

```shell
docker run -d --name jaeger \
  -e COLLECTOR_ZIPKIN_HTTP_PORT=9411 \
  -p 5775:5775/udp \
  -p 6831:6831/udp \
  -p 6832:6832/udp \
  -p 5778:5778 \
  -p 16686:16686 \
  -p 14268:14268 \
  -p 9411:9411 \
  jaegertracing/all-in-one:1.6
```

This starts a Docker container with a Jaeger agent and collector, in-memory storage, the Jaeger UI, and a little more.

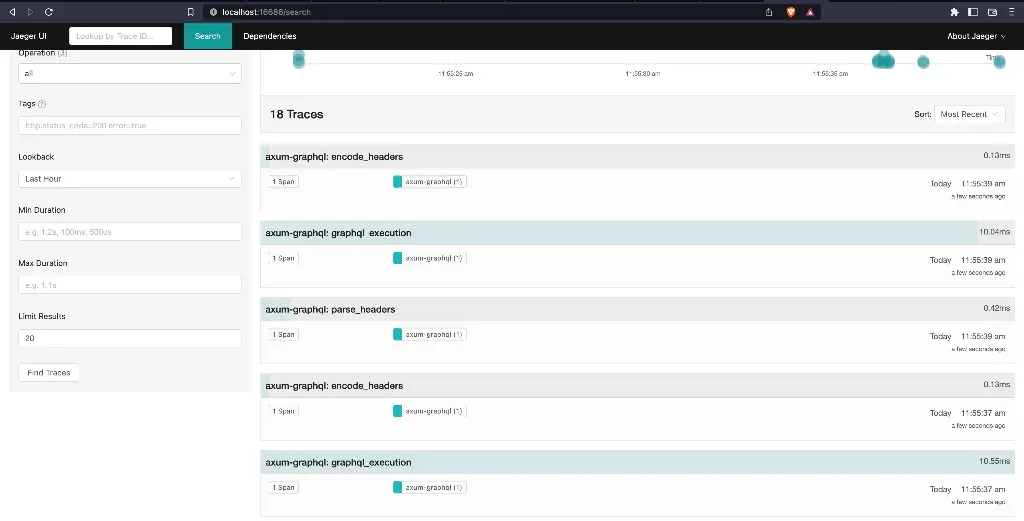

Next, start your service with cargo run and perform a few requests through the GraphQL Playground. After this, open a new browser tab and navigate to http://localhost:16686/search. Select axum-graphql (or whatever else you called your service) from the dropdown on the left side and click on “Find Traces”. This should present you with a view like this:

If what you see is similar to the image above, congratulations. The basic setup is now complete, and there is only one thing left to do: Containerize your GraphQL API with Rust.

Containerizing your GraphQL API

Most services get containerized nowadays, and your GraphQL API should be no different. Time to create a container with your service that you can deploy nearly everywhere.

The first thing you should create is a `.dockerignore` file. It keeps unnecessary files out of the build context that is sent to the Docker daemon. Your `target` folder, for example, is useless if you don’t work on a Linux machine yourself.

Drop the following content into your .dockerignore:

```
.git/
.env
```

The next thing you need is a Dockerfile. Create one and add the following lines:

```dockerfile
# (1)
FROM --platform=linux/amd64 lukemathwalker/cargo-chef:latest-rust-1.65.0 AS chef
WORKDIR /app

FROM --platform=linux/amd64 chef AS planner
COPY . .
RUN cargo chef prepare --recipe-path recipe.json

FROM --platform=linux/amd64 chef AS builder
COPY --from=planner /app/recipe.json recipe.json
RUN cargo chef cook --release --recipe-path recipe.json
COPY . .
RUN cargo build --release

# The runtime stage must match the platform the binary was built for.
FROM --platform=linux/amd64 debian:bookworm-slim
RUN mkdir -p /app
# (2)
RUN groupadd -g 999 appuser && \
    useradd -r -u 999 -g appuser appuser
USER appuser
COPY --from=builder /app/target/release/axum-graphql /app
WORKDIR /app
ENV JAEGER_ENABLED=true
EXPOSE 8000
ENTRYPOINT ["./axum-graphql"]
```

That’s it already. There are only two things that probably need a dedicated explanation:

(1): cargo-chef is a tool that helps you use Docker’s layer caching to your advantage: it compiles your dependencies in a separate layer that is only rebuilt when your dependencies change, which can speed up subsequent container builds drastically. You can learn more about cargo-chef here.

(2): It’s never a good idea to run software inside a container as root. Creating a dedicated, unprivileged user takes only a single command and limits what an attacker can do if they manage to compromise your service.

You can now build your container with `docker build -t axum-graphql:latest .` and start it with `docker run -p 8000:8000 axum-graphql:latest`. Then open your browser again and navigate to http://localhost:8000. You should see the Playground running and be able to send off a few queries.

If everything works, you are done. Congratulations!

A Quick Recap

Time to quickly recap what you have achieved so far.

You have:

- Set up a new Rust project

- Added formatting and linting

- Created a basic web server

- Added a pretty basic but working GraphQL API on top

- Integrated metrics into your service and registered (and collected) some of the most important ones

- Added tracing on top and created a root span

- Containerized your application so you can deploy it nearly anywhere

That’s quite an achievement. Congratulations once again!

What’s Next?

You can play around with your new GraphQL API freely from now on. If you have any plans for an API you want to implement, you can take a look at the async-graphql book. It answers most questions you will probably have while implementing a more advanced GraphQL API with Rust. There are still quite a few things you can learn about GraphQL and Rust.

If you want to go one step further, create a Helm chart and try to deploy your service to a real Kubernetes cluster (or a local one like minikube). You will see that this is another challenging task that teaches you quite a lot.